EYE-EEG

Open source MATLAB toolbox for simultaneous eye tracking & EEG

EYE-EEG version 1.0 released

After more than ten years, it's probably time for a "version 1.0". Download it here.

unfold: A sister toolbox for EYE-EEG

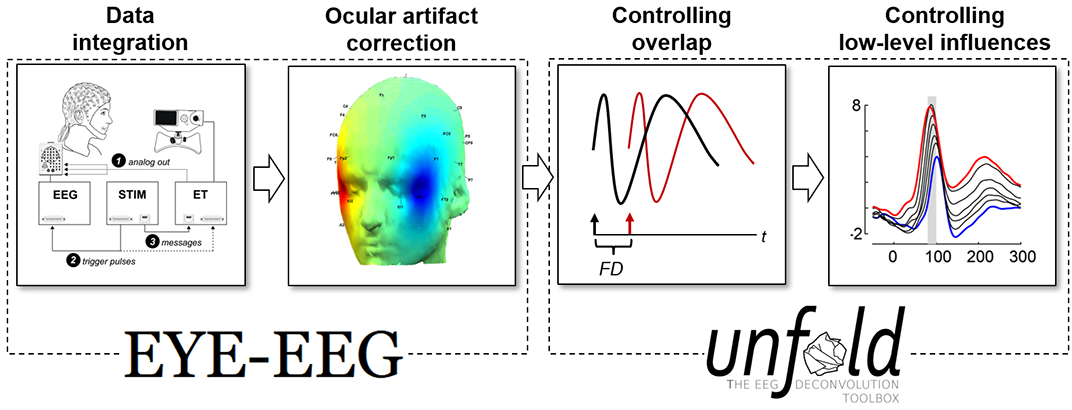

The EYE-EEG toolbox allows researchers to synchronize and integrate eye-tracking and EEG data, detect eye movements, and correct for ocular artifacts. However, analyses of the EEG during free viewing also require users to deal with two additional analysis problems: the temporal overlap between the neural responses produced by each eye fixation (which often differs systematically between conditions) and the fact that many low-level properties of the eye movement and the viewed stimulus also strongly modulate this neural response. Fortunately, EYE-EEG now has a new "sister" toolbox that elegantly deals with both problems: unfold, a toolbox for (non)linear deconvolution modelling that is fully compatible with EYE-EEG and can directly read EYE-EEG datasets for further processing.

- Check out the unfold homepage...

- ...and the reference paper (open access) for more information

- ...and this open access paper for a detailed explanation of how nonlinear deconvolution supports combined ET/EEG analysis

What is the EYE-EEG toolbox?

The EYE-EEG toolbox is an extension for the open-source MATLAB toolbox EEGLAB, developed to facilitate integrated analyses of electrophysiological and oculomotor data [1]. The toolbox parses, imports, and synchronizes simultaneously recorded eye tracking data and adds it as extra channels to the EEG.
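As an illustration of what synchronization based on common events involves, here is a minimal sketch in Python (used only for illustration; the toolbox itself is written in MATLAB). It assumes that the same trigger events were recorded by both systems and that the two clocks differ only by an offset and a constant rate factor, so that eye-tracker timestamps can be mapped to EEG sample indices by a least-squares line through the shared events. The function names are hypothetical and this is not EYE-EEG's own synchronization code.

```python
# Hypothetical helper names; an illustrative sketch, not EYE-EEG's synchronization code.


def fit_linear_sync(et_times, eeg_latencies):
    """Least-squares fit of eeg_latency = slope * et_time + intercept.

    et_times: eye-tracker timestamps of the shared trigger events (e.g., in ms).
    eeg_latencies: EEG sample indices of the same events, in the same order.
    """
    if len(et_times) != len(eeg_latencies) or len(et_times) < 2:
        raise ValueError("need at least two matched events")
    n = len(et_times)
    mean_x = sum(et_times) / n
    mean_y = sum(eeg_latencies) / n
    sxx = sum((x - mean_x) ** 2 for x in et_times)
    if sxx == 0:
        raise ValueError("shared events must have distinct timestamps")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(et_times, eeg_latencies))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def sync_residuals(et_times, eeg_latencies, slope, intercept):
    """Observed minus predicted EEG latency (in samples) for each shared event."""
    return [y - (slope * x + intercept) for x, y in zip(et_times, eeg_latencies)]


def et_to_eeg(et_time, slope, intercept):
    """Map one eye-tracker timestamp to a (fractional) EEG sample index."""
    return slope * et_time + intercept


if __name__ == "__main__":
    # Example: EEG sampled at 500 Hz (0.5 samples per ms), eye-tracker timestamps in ms.
    et = [1000000, 1010000, 1050000, 1100000]
    eeg = [100, 5100, 25100, 50100]
    slope, intercept = fit_linear_sync(et, eeg)
    print(slope, intercept, sync_residuals(et, eeg, slope, intercept))
```

Large residuals, or a slope far from the nominal ratio of the two sampling clocks, would suggest missing or mismatched trigger events. Once such a mapping is known, the eye track can be interpolated onto the EEG time base and appended as extra channels.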

Saccades and fixations can be imported from the eye tracking raw data or detected with an adaptive velocity-based algorithm [2]. Eye movements are then added as new time-locking events to EEGLAB's event structure, allowing easy saccade- and fixation-related EEG analysis (e.g., fixation-related potentials, FRPs). Alternatively, EEG data can be aligned to stimulus onsets and analyzed according to oculomotor behavior (e.g., pupil size, microsaccades) in a given trial. Saccade-related ICA components can be objectively identified based on their covariance with the electrically independent eye tracker [3].
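The core idea of velocity-based detection with relative thresholds [2] can be sketched as follows (Python, for illustration only). Gaze velocity is computed with a 5-sample moving window, a noise-robust, median-based standard deviation of the velocity is estimated per dimension, and samples outside an ellipse with radii lambda times that SD are marked as saccadic; runs shorter than a minimum duration are discarded. The values lambda = 6 and a minimum of 3 samples are illustrative assumptions, the binocular criterion and other refinements are omitted, and the function names are hypothetical. EYE-EEG's own implementation contains modifications and additional options beyond this.

```python
import math
import statistics


def smoothed_velocity(pos, srate):
    """5-sample moving-window velocity (position units per second).

    v[n] = (x[n+2] + x[n+1] - x[n-1] - x[n-2]) / (6 * dt); the two samples
    at each edge cannot use the full window and are set to 0 here.
    """
    dt = 1.0 / srate
    vel = [0.0] * len(pos)
    for i in range(2, len(pos) - 2):
        vel[i] = (pos[i + 2] + pos[i + 1] - pos[i - 1] - pos[i - 2]) / (6.0 * dt)
    return vel


def median_sd(vel):
    """Median-based SD: sqrt(median(v^2) - median(v)^2), with a mean-based fallback."""
    med = statistics.median(vel)
    sd = math.sqrt(max(0.0, statistics.median([v * v for v in vel]) - med * med))
    if sd < 1e-12:
        mean = statistics.fmean(vel)
        sd = math.sqrt(max(0.0, statistics.fmean([v * v for v in vel]) - mean * mean))
    if sd < 1e-12:
        raise ValueError("velocity SD is zero; cannot set a relative threshold")
    return sd


def detect_saccades(x, y, srate, lam=6.0, min_dur=3):
    """Return (onset, offset) sample indices (inclusive) of candidate saccades."""
    vx = smoothed_velocity(x, srate)
    vy = smoothed_velocity(y, srate)
    eta_x = lam * median_sd(vx)  # relative threshold, horizontal
    eta_y = lam * median_sd(vy)  # relative threshold, vertical
    # Elliptic criterion: a sample is saccadic if it lies outside the threshold ellipse.
    above = [(a / eta_x) ** 2 + (b / eta_y) ** 2 > 1.0 for a, b in zip(vx, vy)]
    saccades = []
    start = None
    for i, flag in enumerate(above + [False]):  # sentinel closes a run at the end
        if flag and start is None:
            start = i
        elif not flag and start is not None:
            if i - start >= min_dur:
                saccades.append((start, i - 1))
            start = None
    return saccades
```

Because the threshold is a multiple of each recording's own velocity noise, the same lambda can be used across participants and eye trackers with different noise levels, which is what makes the thresholds "relative".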

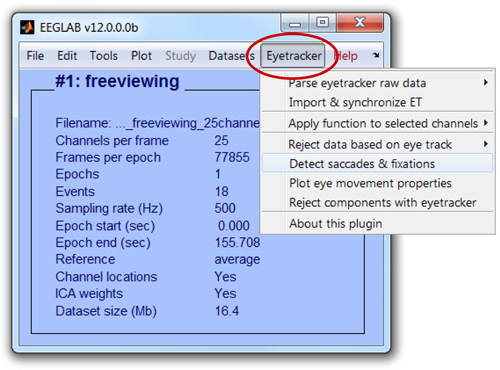

EYE-EEG adds a top-level menu called "Eyetracker" to EEGLAB. All functions can be accessed via this menu, and the calls are saved in EEGLAB's command history. Alternatively, functions can be called from the command line, allowing advanced users to use them in custom scripts. Using EEGLAB's export functions, integrated datasets can also be exported to other free toolboxes such as FieldTrip or Brainstorm.

EYE-EEG was written by Olaf Dimigen and Ulrich Reinacher (contributor until 2012).

Why combine eye tracking & EEG?

Everyday vision is an active process that involves making several saccades per second. In contrast, most EEG data is recorded during prolonged visual fixation. An alternative approach to signal analysis (for an overview, see [1]) is to time-lock the EEG not to passive stimulus presentations but to the onsets or offsets of saccadic eye movements in more natural viewing situations (yielding saccade- and fixation-related potentials, SRPs/FRPs). Beyond this, recording precise eye movements together with the EEG is useful for many other purposes. These include controlling fixation, detecting signal distortions from microsaccades (e.g., [4]), improving ocular artifact correction [1, 3], measuring saccadic reaction times, presenting stimuli gaze-contingently [5, 6], simultaneous pupillometry, and improving brain-computer interfaces.

Overview of functions

- parse raw eye tracking data, store it in MATLAB format

- synchronize eye track & EEG data based on common events

- add gaze position & pupil size as extra channels

- import saccades/fixations/blinks detected online by the eye tracker

- remove continuous or epoched data with out-of-range values in the eye track

- control fixation, objectively reject blink artifacts

- apply existing EEGLAB functions (e.g., filters) only to the EEG or only to the eye track

- velocity-based (micro)saccade detection with relative thresholds (Engbert & Mergenthaler, 2006)

- add saccades & fixations as time-locking events to EEGLAB's event structure

- plot eye movement properties

- create "optimized" ICA training data (with overweighted spike potentials) for better artifact correction (Dimigen, 2020)

- automatically reject ocular ICs that covary with the electrically independent eye track

- use the variance ratio criterion proposed by Plöchl et al. (2012)

- analyze fixation-related potentials (FRPs) in time or frequency domain

- directly relate EEG and oculomotor behavior (e.g., pupil size)

For details, please see the tutorial.
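The variance ratio criterion listed above can be sketched in a few lines of Python (illustrative only; not EYE-EEG's MATLAB code). For each independent component (IC), the mean variance of its activation within saccade intervals is divided by its mean variance within fixation intervals, and components whose ratio exceeds a threshold are flagged as ocular. The sketch assumes the IC activations and the saccade and fixation intervals (inclusive sample-index pairs) are already available; the function names are hypothetical, and the default threshold of 1.1 is an assumption to be checked against [3] and the tutorial.

```python
import statistics


def mean_interval_variance(signal, intervals):
    """Average of the per-interval variances; intervals are inclusive (start, end) pairs."""
    variances = []
    for start, end in intervals:
        segment = signal[start:end + 1]
        if len(segment) >= 2:
            variances.append(statistics.pvariance(segment))
    if not variances:
        raise ValueError("no interval with at least two samples")
    return statistics.fmean(variances)


def variance_ratios(activations, saccade_intervals, fixation_intervals):
    """activations: one list of samples per IC. Returns var(saccades) / var(fixations) per IC."""
    ratios = []
    for act in activations:
        sac_var = mean_interval_variance(act, saccade_intervals)
        fix_var = mean_interval_variance(act, fixation_intervals)
        ratios.append(sac_var / fix_var if fix_var > 0 else float("inf"))
    return ratios


def flag_ocular_components(ratios, threshold=1.1):
    """Indices of ICs whose variance ratio exceeds the (assumed) threshold."""
    return [i for i, r in enumerate(ratios) if r > threshold]
```

Ocular components (corneoretinal dipole, saccadic spike potential) are much more active during saccades than during fixations, whereas brain components should show roughly similar variance in both intervals, which is why a ratio only slightly above 1 can already be used as a cut-off.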

Requirements

Software

- MATLAB. The toolbox has been tested with MATLAB versions between R2010a and R2021b, but other versions should work as well.

- EEGLAB. Freely available at http://sccn.ucsd.edu/eeglab. We recommend using the latest version.

Currently, the toolbox reads text-converted raw data from eye trackers by SR Research (e.g., EyeLink™ series), SensoMotoric Instruments (e.g., iView X™ and RED™ series), and Tobii Pro (e.g., Tobii Pro TX-300 or Pro Spectrum). If your EEG and eye track are already synchronized (see Tutorial: How to connect eye tracker & EEG), the toolbox can also be used to further process data recorded with other eye-tracking hardware. There are no known limitations regarding EEG hardware, since EEGLAB imports most EEG formats.
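For the supported trackers, reading the text-converted file largely means picking out the messages that carry the shared trigger events. The Python sketch below (illustrative only) assumes EyeLink-style message lines of the form "MSG <timestamp> <keyword> <value>", where the keyword, here the hypothetical "TRIGGER", is whatever string the experiment script wrote together with each trigger value. Other manufacturers' exports use different layouts, and this is not EYE-EEG's parser.

```python
def parse_sync_messages(lines, keyword="TRIGGER"):
    """Return (timestamp, trigger_value) pairs from lines like 'MSG 1234567 TRIGGER 12'.

    The keyword is a hypothetical placeholder for the string written by the
    experiment script; sample lines and other messages are ignored.
    """
    events = []
    for line in lines:
        parts = line.split()  # splits on tabs and spaces alike
        if len(parts) >= 4 and parts[0] == "MSG" and parts[2] == keyword:
            try:
                events.append((int(parts[1]), int(parts[3])))
            except ValueError:
                continue  # skip malformed timestamps or non-integer trigger values
    return events
```

The resulting timestamps, paired with the latencies of the same trigger values in the EEG, are the shared events a synchronization step would need.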

Download & Installation

- Go to the download page and download the .zip archive (from GitHub) containing a folder with the toolbox files and a PDF of the reference paper.

- Unzip and move the entire folder into EEGLAB’s "plugins" directory. Inside this directory, you should now have a subdirectory called "eye_eeg0.99".

- Start EEGLAB. It should automatically recognize and add the plug-in. You should see the following line appear in your MATLAB window:

>> EEGLAB: adding "eye_eeg" plugin version 0.99 (see >> help eegplugin_eye_eeg)

That's it. EEGLAB now has an additional menu called "Eyetracker".

Alternatively, you can install EYE-EEG from within the EEGLAB user interface using the EEGLAB extension manager, or download it from the EYE-EEG GitHub repository.

Getting started

To get started, read the step-by-step tutorial or try out the toolbox with example data.

Citing the EYE-EEG toolbox

This is free software distributed under the GNU General Public License. However, we do ask those who use this program or adapt its functions to cite it in their work. Please refer to it as the "EYE-EEG toolbox" (or "EYE-EEG extension") and cite reference [1] below. If you use the saccade detection, please additionally cite reference [2]. Note that EYE-EEG contains modifications and additional options for the saccade detection procedures that go beyond those proposed in the original paper; it is therefore helpful if you clarify that you used the implementations of these methods in EYE-EEG. If you select ICA components based on the variance ratio criterion, please make sure to cite references [3] and [7]. The reference for the optimized ICA procedure (OPTICAT) is reference [7].

Contact

For bug reports, feature requests, and all other feedback, please email us. We would be happy to hear about any papers you have written or insights you have gained using EYE-EEG. We also welcome any collaboration in improving the tools, adding features, or supporting additional eye trackers.

References

- [1] Dimigen, O., Sommer, W., Hohlfeld, A., Jacobs, A., & Kliegl, R. (2011). Coregistration of eye movements and EEG in natural reading: Analyses & Review. Journal of Experimental Psychology: General, 140(4), 552-572. (toolbox reference paper)

- [2] Engbert, R., & Mergenthaler, K. (2006). Microsaccades are triggered by low retinal image slip. PNAS, 103(18), 7192-7197.

- [3] Plöchl, M., Ossandon, J. P., & König, P. (2012). Combining EEG and eye tracking: identification, characterization, and correction of eye movement artifacts in electroencephalographic data. Frontiers in Human Neuroscience. doi: 10.3389/fnhum.2012.00278

- [4] Dimigen, O., Valsecchi, M., Sommer, W., & Kliegl, R. (2009). Human microsaccade-related visual brain responses. Journal of Neuroscience, 29, 12321-12331.

- [5] Dimigen, O., Kliegl, R., & Sommer, W. (2012). Trans-saccadic parafoveal preview benefits in fluent reading: a study with fixation-related brain potentials. NeuroImage, 62(1), 381-393.

- [6] Kornrumpf, B., Niefind, F., Sommer, W., & Dimigen, O. (2016). Neural correlates of word recognition: A systematic comparison of natural reading and RSVP. Journal of Cognitive Neuroscience, 28(9), 1374-1391.

- [7] Dimigen, O. (2020). Optimizing the ICA-based removal of ocular EEG artifacts from free viewing experiments. NeuroImage, 207, 116117.